|

|

Lecture notes, cheat sheets

Database. Lecture notes: briefly, the most important

Directory / Lecture notes, cheat sheets Table of contents

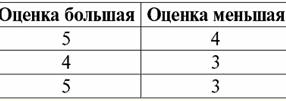

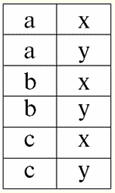

Lecture No. 1. Introduction 1. Database management systems Database management systems (DBMS) are specialized software products that allow: 1) permanently store arbitrarily large (but not infinite) amounts of data; 2) extract and modify these stored data in one way or another, using so-called queries; 3) create new databases, i.e. describe logical data structures and set their structure, i.e. provide a programming interface; 4) access stored data by several users at the same time (i.e. provide access to the transaction management mechanism). Accordingly, the Database are datasets under the control of management systems. Now database management systems are the most complex software products on the market and form its basis. In the future, it is planned to conduct developments on a combination of conventional database management systems with object-oriented programming (OOP) and Internet technologies. Initially, DBMS were based on hierarchical и network data models, i.e. allowed to work only with tree and graph structures. In the process of development in 1970, there were database management systems proposed by Codd (Codd), based on relational data model. 2. Relational databases The term "relational" comes from the English word "relation" - "relationship". In the most general mathematical sense (as can be remembered from the classical set algebra course) respect - it's a set R = {(x1,..., xn) | x1 ∈ A1, ..., xn ∈ An}, where A1,...,An are the sets forming the Cartesian product. In this way, ratio R is a subset of the Cartesian product of sets: A1 x... x An : R ⊆ A 1 x... x An. For example, consider the binary relations of the strict order "greater than" and "less than" on the set of ordered pairs of numbers A 1 = A2 = {3, 4, 5}: R> = {(3, 4), (4, 5), (3, 5)} ⊂ A1 xA2; R< = {(5, 4), (4, 3), (5, 3)} ⊂ A1 xA2. These relationships can be presented in the form of tables. Ratio "greater than">:

Ratio "less than" R<:

Thus, we see that in relational databases, a wide variety of data is organized in the form of relationships and can be presented in the form of tables. It should be noted that these two relations R> and R< are not equivalent to each other, in other words, the tables corresponding to these relationships are not equal to each other. So, the forms of data representation in relational databases can be different. How does this possibility of different representation manifest itself in our case? Relations R> and R< - these are sets, and a set is an unordered structure, which means that in tables corresponding to these relationships, rows can be interchanged. But at the same time, the elements of these sets are ordered sets, in our case - ordered pairs of numbers 3, 4, 5, which means that the columns cannot be interchanged. Thus, we have shown that the representation of a relation (in the mathematical sense) as a table with an arbitrary order of rows and a fixed number of columns is an acceptable, correct form of representation of relations. But if we consider the relations R> and R< from the point of view of the information embedded in them, it is clear that they are equivalent. Therefore, in relational databases, the concept of "relationship" has a slightly different meaning than a relation in general mathematics. Namely, it is not related to the ordering by columns in a tabular form of presentation. Instead, so-called "row - column heading" relationship schemes are introduced, i.e., each column is given a heading, after which they can be freely swapped. This is what our R relationships will look like> and R< in a relational database. A strict order relation (instead of the relation R>):

A strict order relation (instead of the relation R<):

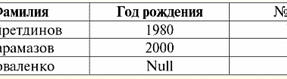

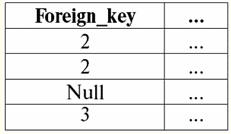

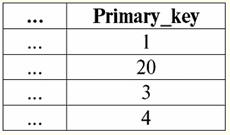

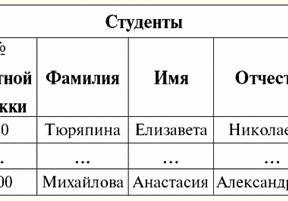

Both tables-relationships get a new one (in this case, the same, since by introducing additional headers we have erased the differences between the relations R> and R<) title. So, we see that with the help of such a simple trick as adding the necessary headers to the tables, we come to the fact that the relations R> and R< become equivalent to each other. Thus, we conclude that the concept of "relationship" in the general mathematical and relational sense does not completely coincide, they are not identical. Currently, relational database management systems form the basis of the information technology market. Further research is being carried out in the direction of combining varying degrees of the relational model. Lecture #2. Missing Data Two types of values are described in database management systems for detecting missing data: empty (or Empty-values) and undefined (or Null-values). In some (mostly commercial) literature, Null values are sometimes referred to as empty or null values, but this is incorrect. The meaning of the empty and indefinite meanings is fundamentally different, so it is necessary to carefully monitor the context of the use of a particular term. 1. Empty values (Empty-values) empty value is just one of many possible values for some well-defined data type. We list the most "natural", immediate empty values (i.e. empty values that we could allocate on our own without having any additional information): 1) 0 (zero) - null value is empty for numeric data types; 2) false (wrong) - is an empty value for a boolean data type; 3) B'' - empty bit string for variable length strings; 4) "" - empty string for character strings of variable length. In the cases above, you can determine whether a value is null or not by comparing the existing value with the null constant defined for each data type. But database management systems, due to the schemes implemented in them for long-term data storage, can only work with strings of constant length. Because of this, an empty string of bits can be called a string of binary zeros. Or a string consisting of spaces or any other control characters is an empty string of characters. Here are some examples of constant length empty strings: 1) B'0'; 2) B'000'; 3) ' '. How can you tell if a string is empty in these cases? In database management systems, a logical function is used to test for emptiness, that is, the predicate IsEmpty(<expression>), which literally means "eat empty". This predicate is usually built into the database management system and can be applied to any type of expression. If there is no such predicate in database management systems, then you can write a logical function yourself and include it in the list of objects of the database being designed. Consider another example where it is not so easy to determine whether we have an empty value. Date type data. Which value in this type should be considered an empty value if the date can vary in the range from 01.01.0100. before 31.12.9999/XNUMX/XNUMX? To do this, a special designation is introduced into the DBMS for empty date constants {...}, if the value of this type is written: {DD. MM. YY} or {YY. MM. DD}. With this value, a comparison occurs when checking the value for emptiness. It is considered to be a well-defined, "full" value of an expression of this type, and the smallest possible one. When working with databases, null values are often used as default values or are used when expression values are missing. 2. Undefined values (Null values) Word zero used to denote undefined values in databases. To better understand what values are understood as null, consider a table that is a fragment of a database:

So, undefined value or Null value - this: 1) unknown, but usual, i.e. applicable value. For example, Mr. Khairetdinov, who is number one in our database, undoubtedly has some passport data (like a person born in 1980 and a citizen of the country), but they are not known, therefore, they are not included in the database. Therefore, the Null value will be written to the corresponding column of the table; 2) not applicable value. Mr. Karamazov (No. 2 in our database) simply cannot have any passport data, because at the time of the creation of this database or the entry of data into it, he was a child; 3) the value of any cell of the table, if we cannot say whether it is applicable or not. For example, Mr. Kovalenko, who occupies the third position in our database, does not know the year of birth, so we cannot say with certainty whether he has or does not have passport data. And consequently, the values of two cells in the line dedicated to Mr. Kovalenko will be Null-value (the first - as unknown in general, the second - as a value whose nature is unknown). Like any other data type, Null values also have certain properties. We list the most significant of them: 1) over time, the understanding of the Null value may change. For example, for Mr. Karamazov (No. 2 in our database) in 2014, i.e., upon reaching the age of majority, the Null-value will change to some specific, well-defined value; 2) A null value can be assigned to a variable or constant of any type (numeric, string, boolean, date, time, etc.); 3) the result of any operations on expressions with Null-values as operands is a Null-value; 4) an exception to the previous rule are the operations of conjunction and disjunction under the conditions of the laws of absorption (for more details on the laws of absorption, see paragraph 4 of lecture No. 2). 3. Null values and the general rule for evaluating expressions Let's talk more about actions on expressions containing Null values. The general rule for dealing with Null values (that the result of operations on Null values is a Null value) applies to the following operations: 1) to arithmetic; 2) to bitwise negation, conjunction and disjunction operations (except for absorption laws); 3) to operations with strings (for example, concatenation - concatenation of strings); 4) to comparison operations (<, ≤, ≠, ≥, >). Let's give examples. As a result of applying the following operations, Null values will be obtained: 3 + Null, 1/ Null, (Ivanov' + '' + Null) ≔ Null Here, instead of the usual equality, we use substitution operation "≔" due to the special nature of working with Null values. In the following, this character will also be used in similar situations, which means that the expression to the right of the wildcard character can replace any expression from the list to the left of the wildcard character. The nature of Null values often results in some expressions producing a Null value instead of the expected null, for example: (x - x), y * (x - x), x * 0 ≔ Null when x = Null. The thing is that when substituting, for example, the value x = Null into the expression (x - x), we get the expression (Null - Null), and the general rule for calculating the value of the expression containing Null values comes into force, and information about the fact that here the Null value corresponds to the same variable is lost. We can conclude that when calculating any operations other than logical ones, Null values are interpreted as inapplicable, and so the result is also a Null value. The use of Null values in comparison operations leads to no less unexpected results. For example, the following expressions also produce Null values instead of the expected Boolean True or False values: (Null < Null); (Null ≤ null); (Null = Null); (Null ≠ Null); (Null > Null); (Null ≥ Null) ≔ Null; Thus, we conclude that it is impossible to say that a Null value is equal or not equal to itself. Each new occurrence of a Null value is treated as independent, and each time the Null values are treated as different unknown values. In this, Null values are fundamentally different from all other data types, because we know that it was safe to say about all the values \uXNUMXb\uXNUMXbpassed earlier and their types that they are equal or not equal to each other. So we see that Null values are not the values of variables in the usual sense of the word. Therefore, it becomes impossible to compare the values of variables or expressions containing Null values, since as a result we will receive not the boolean True or False values, but Null values, as in the following examples: (x < Null); (x ≤ null); (x=Null); (x ≠ Null); (x > Null); (x ≥ Null) ≔ Null; Therefore, by analogy with empty values, to check an expression for Null values, you must use a special predicate: IsNull(<expression>), which literally means "is Null". The Boolean function returns True if the expression contains Null or equals Null, and False otherwise, but never returns Null. The IsNull predicate can be applied to variables and expressions of any type. When applied to expressions of the empty type, the predicate will always return False. For example:

So, indeed, we see that in the first case, when the IsNull predicate was taken from zero, the output turned out to be False. In all cases, including the second and third, when the arguments of the logical function turned out to be equal to the Null value, and in the fourth case, when the argument itself was initially equal to the Null value, the predicate returned True. 4. Null values and logical operations Typically, only three logical operations are directly supported in database management systems: negation ¬, conjunction &, and disjunction ∨. The operations of succession ⇒ and equivalence ⇔ are expressed in terms of them using substitutions: (x ⇒ y) ≔ (¬x ∨ y); (x ⇔ y) ≔ (x ⇒ y) & (y ⇒ x); Note that these substitutions are fully preserved when using Null values. Interestingly, using the negation operator "¬" any of the operations conjunction & or disjunction ∨ can be expressed one through the other as follows: (x & y) ≔¬ (¬x ∨¬y); (x ∨ y) ≔ ¬(¬x & ¬y); These substitutions, as well as the previous ones, are not affected by Null-values. And now we will give the truth tables of the logical operations of negation, conjunction and disjunction, but in addition to the usual True and False values, we also use the Null value as operands. For convenience, we introduce the following notation: instead of True, we will write t, instead of False - f, and instead of Null - n. 1. Denial xx.

It is worth noting the following interesting points regarding the negation operation using Null values: 1) ¬¬x ≔ x - the law of double negation; 2) ¬Null ≔ Null - The Null value is a fixed point. 2. Conjunction x & y.

This operation also has its own properties: 1) x & y ≔ y & x - commutativity; 2) x & x ≔ x - idempotence; 3) False & y ≔ False, here False is an absorbing element; 4) True & y ≔ y, here True is the neutral element. 3. Disjunction x ∨ y.

Features: 1) x ∨ y ≔ y ∨ x - commutativity; 2) x ∨ x ≔ x - idempotency; 3) False ∨ y ≔ y, here False is the neutral element; 4) True ∨ y ≔ True, here True is an absorbing element. An exception to the general rule is the rules for calculating the logical operations conjunction & and disjunction ∨ under the conditions of action absorption laws: (False & y) ≔ (x & False) ≔ False; (True ∨ y) ≔ (x ∨ True) ≔ True; These additional rules are formulated so that when replacing a Null value with False or True, the result would still not depend on this value. As previously shown for other types of operations, using Null values in Boolean operations can also result in unexpected values. For example, the logic at first glance is broken in the law of the exclusion of the third (x ∨ ¬x) and the law of reflexivity (x = x), since for x ≔ Null we have: (x ∨ ¬x), (x = x) ≔ Null. Laws are not enforced! This is explained in the same way as before: when a Null value is substituted into an expression, the information that this value is reported by the same variable is lost, and the general rule for working with Null values comes into force. Thus, we conclude: when performing logical operations with Null values as an operand, these values are determined by database management systems as applicable but unknown. 5. Null values and condition checking So, from the above, we can conclude that in the logic of database management systems there are not two logical values (True and False), but three, because the Null value is also considered as one of the possible logical values. That is why it is often referred to as the unknown value, the Unknown value. However, despite this, only two-valued logic is implemented in database management systems. Therefore, a condition with a Null value (an undefined condition) must be interpreted by the machine as either True or False. By default, the DBMS language recognizes a condition with a Null value as False. We illustrate this with the following examples of the implementation of conditional If and While statements in database management systems: If P then A else B; This entry means: if P evaluates to True, then action A is performed, and if P evaluates to False or Null, then action B is performed. Now we apply the negation operation to this operator, we get: If ¬P then B else A; In turn, this operator means the following: if ¬P evaluates to True, then action B is performed, and if ¬P evaluates to False or Null, then action A will be performed. And again, as we can see, when a Null value appears, we encounter unexpected results. The point is that the two If statements in this example are not equivalent! Although one of them is obtained from the other by negating the condition and rearranging the branches, that is, by a standard operation. Such operators are generally equivalent! But in our example, we see that the null value of the condition P in the first case corresponds to the command B, and in the second - to A. Now consider the action of the while conditional statement: While P do A; B; How does this operator work? As long as P is True, action A will be executed, and as soon as P is False or Null, action B will be executed. But Null values are not always interpreted as False. For example, in integrity constraints, undefined conditions are recognized as True (integrity constraints are conditions that are imposed on the input data and ensure their correctness). This is because in such constraints only deliberately false data should be rejected. And again, in database management systems, there is a special substitution function IfNull(integrity constraints, True), with which Null values and undefined conditions can be represented explicitly. Let's rewrite the conditional If and While statements using this function: 1) If IfNull ( P, False) then A else B; 2) While IfNull( P, False) do A; B; So, the substitution function IfNull(expression 1, expression 2) returns the value of the first expression if it does not contain a Null value, and the value of the second expression otherwise. It should be noted that no restrictions are imposed on the type of the expression returned by the IfNull function. Therefore, using this function, you can explicitly override any rules for working with Null values. Lecture #3. Relational Data Objects 1. Requirements for the tabular form of representation of relations 1. The very first requirement for the tabular form of the representation of relations is finiteness. Working with infinite tables, relationships, or any other representations and data organizations is inconvenient, rarely justifies the effort expended, and, moreover, this direction has little practical application. But besides this, quite expected, there are other requirements. 2. The heading of the table representing the relationship must necessarily consist of one line - the heading of the columns, and with unique names. Multi-level headers are not allowed. For example, these:

All multi-tier headings are replaced by single-tier headings by selecting suitable headings. In our example, the table after the specified transformations will look like this:

We see that the name of each column is unique, so they can be swapped as you like, i.e. their order becomes irrelevant. And this is very important because it is the third property. 3. The order of the lines should not be significant. However, this requirement is also not strictly restrictive, since any table can be easily reduced to the required form. For example, you can enter an additional column that will determine the order of the rows. In this case, nothing will change from rearranging the lines either. Here is an example of such a table:

4. There should be no duplicate rows in the table representing the relationship. If there are duplicate rows in the table, this can be easily fixed by introducing an additional column responsible for the number of duplicates of each row, for example:

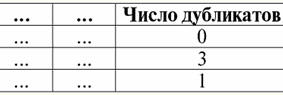

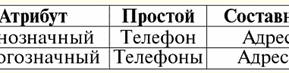

The following property is also quite expected, because it underlies all the principles of programming and designing relational databases. 5. Data in all columns must be of the same type. And besides, they must be of a simple type. Let us explain what simple and complex data types are. A simple data type is one whose data values are non-composite, that is, they do not contain constituent parts. Thus, neither lists, nor arrays, nor trees, nor similar composite objects should be present in the columns of the table. Such objects are composite data type - in relational database management systems, they themselves are presented in the form of independent tables-relations. 2. Domains and attributes Domains and attributes are basic concepts in the theory of creating and managing databases. Let's explain what it is. Formally, attribute domain (denoted by dom(a)), where a is an attribute, is defined as the set of valid values of the same type of the corresponding attribute a. This type must be simple, i.e.: dom(a) ⊆ {x | type(x) = type(a)}; Attribute (denoted a) is in turn defined as an ordered pair consisting of the attribute name name(a) and the attribute domain dom(a), i.e.: a = (name(a): dom(a)); This definition uses ":" instead of the usual "," (as in standard ordered pair definitions). This is done to emphasize the association of the attribute's domain and the attribute's data type. Here are some examples of different attributes: а1 = (Course: {1, 2, 3, 4, 5}); а2 = (MassaKg: {x | type(x) = real, x 0}); а3 = (LengthSm: {x | type(x) = real, x 0}); Note that the attributes a2 and a3 domains formally match. But the semantic meaning of these attributes is different, because comparing the values of mass and length is meaningless. Therefore, an attribute domain is associated not only with the type of valid values, but also with a semantic meaning. In the tabular form of a relationship, the attribute is displayed as a column heading in the table, and the domain of the attribute is not specified, but is implied. It looks like this:

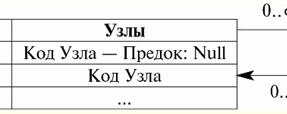

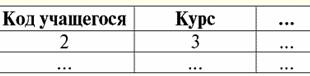

It is easy to see that here each of the headers a1,2,3 columns of a table representing a relationship is a separate attribute. 3. Schemes of relationships. Named value tuples In the theory and practice of DBMS, the concepts of a relation schema and a named value of a tuple on an attribute are basic. Let's bring them. relation scheme (denoted by S) is defined as a finite set of attributes with unique names, i.e.: S = {a | a ∈ S}; In each table that represents a relation, all column headings (all attributes) are combined into the relation's schema. The number of attributes in a relationship schema determines power it relations and is denoted as the cardinality of the set: |S|. A relationship schema may be associated with a relationship schema name. In a tabular form of relationship representation, as you can easily see, the relationship schema is nothing more than a row of column headings.

Y = {a1,2,3,4} - relationship schema of this table. The relation name is displayed as a schematic heading of the table. In text form, the relationship schema can be represented as a named list of attribute names, for example: Students (classbook number, last name, first name, patronymic, date of birth). Here, as in the tabular form, attribute domains are not specified but implied. It follows from the definition that the schema of a relation can also be empty (S = ∅). True, this is possible only in theory, since in practice the database management system will never allow the creation of an empty relationship schema. Named tuple value on attribute (denoted by t(a)) is defined by analogy with an attribute as an ordered pair consisting of an attribute name and an attribute value, i.e.: t(a) = (name(a) : x), x ∈ dom(a); We see that the attribute value is taken from the attribute domain. In the tabular form of a relation, each named value of a tuple on an attribute is a corresponding table cell:

Here t(a1), t(a2), t(a3) - named values of tuple t on attributes a1,2,3. The simplest examples of named tuple values on attributes: (Course: 5), (Score: 5); Here Course and Score are the names of two attributes, respectively, and 5 is one of their values taken from their domains. Of course, although these values are equal in both cases, they are semantically different, since the sets of these values in both cases differ from each other. 4. Tuples. Tuple types The concept of a tuple in database management systems can be intuitively found already from the previous point, when we talked about the named value of a tuple on various attributes. So, tuple (denoted by t, from English. tuple - "tuple") with relation scheme S is defined as the set of named values of this tuple on all attributes included in this relation scheme S. In other words, attributes are taken from scope of a tuple, def(t), i.e.: t ≡ t(S) = {t(a) | a ∈ def(t) ⊆ S;. It is important that no more than one attribute value must correspond to one attribute name. In the tabular form of the relationship, a tuple will be any row of the table, i.e.:

Here t1(S) = {t(a1), t(a2), t(a3), t(a4)} and t2(S) = {t(a5), t(a6), t(a7), t(a8)} - tuples. Tuples in the DBMS differ in types depending on its domain of definition. The tuples are called: 1) partial, if their domain of definition is included or coincides with the schema of the relation, i.e. def(t) ⊆ S. This is a common case in database practice; 2) complete, in the event that their domain of definition completely coincides, is equal to the relation scheme, i.e. def(t) = S; 3) incomplete, if the domain of definition is completely included in the scheme of relations, i.e. def(t) ⊂ S; 4) nowhere defined, if their domain of definition is equal to the empty set, i.e. def(t) = ∅. Let's explain with an example. Let's say we have a relationship given by the following table.

Let here t1 = {10, 20, 30}, t2 = {10, 20, Null}, t3 = {Null, Null, Null}. Then it is easy to see that the tuple t1 - complete, since its domain of definition is def(t1) = {a, b, c} = S. Tuple t2 - incomplete, def(t2) = { a, b} ⊂ S. Finally, the tuple t3 - not defined anywhere, since its def(t3) = ∅. It should be noted that a tuple not defined anywhere is an empty set, nevertheless associated with a relation scheme. Sometimes a nowhere-defined tuple is denoted: ∅(S). As we have already seen in the above example, such a tuple is a table row consisting only of Null values. Interestingly, the comparable, that is, possibly equal, are only tuples with the same relationship schema. Therefore, for example, two nowhere-defined tuples with different relationship schemes will not be equal, as might be expected. They will be different just like their relationship patterns. 5. Relationships. Relationship types And finally, let's define the relationship as a kind of top of the pyramid, consisting of all the previous concepts. So, respect (denoted by r, from English. relation) with relation schema S is defined as a necessarily finite set of tuples having the same relation schema S. Thus: r ≡ r(S) = {t(S) | t ∈r}; By analogy with relation schemes, the number of tuples in a relation is called relationship power and denoted as the cardinality of the set: |r|. Relations, like tuples, differ in types. So the relationship is called: 1) partial, if the following condition is satisfied for any tuple in the relation: [def(t) ⊆ S]. This is (as with tuples) the general case; 2) complete, in case if ∀t ∈ r(S) we have [def(t) = S]; 3) incomplete, if ∃t ∈ r(S) def(t) ⊂ S; 4) nowhere defined, if ∀t ∈ r(S) [def(t) = ∅]. Let us pay special attention to nowhere defined relations. Unlike tuples, working with such relationships involves a bit of subtlety. The point is that nowhere defined relations can be of two types: they can either be empty, or they can contain a single nowhere defined tuple (such relations are denoted by {∅(S)}). comparable (by analogy with tuples), i.e., possibly equal, are only relations with the same relation schema. Therefore, relationships with different relationship patterns are different. In a tabular form, a relation is the body of the table, to which the line - the heading of the columns, i.e. literally - the entire table, along with the first row containing the headings, corresponds. Lecture No. 4. Relational algebra. Unary operations Relational algebra, as you might guess, is a special type of algebra in which all operations are performed on relational data models, i.e., on relationships. In tabular terms, a relationship includes rows, columns, and a row - the heading of the columns. Therefore, natural unary operations are operations of selecting certain rows or columns, as well as changing column headers - renaming attributes. 1. Unary selection operation The first unary operation we will look at is fetch operation - the operation of selecting rows from a table representing a relation, according to some principle, i.e., selecting rows-tuples that satisfy a certain condition or conditions. Fetch Operator denoted by σ , sampling condition - P , i.e., the operator σ is always taken with a certain condition on the tuples P, and the condition P itself is written depending on the scheme of the relation S. Taking into account all this, the fetch operation over the scheme of the relation S in relation to the relation r will look like this: σ r(S) ≡ σ r = {t(S) |t ∈ r & P t} = {t(S) |t ∈ r & IfNull(P t, False}; The result of this operation will be a new relation with the same relation schema S, consisting of those tuples t(S) of the original relation-operand that satisfy the selection condition P t. It is clear that in order to apply some kind of condition to a tuple, it is necessary to substitute the values of the tuple attributes instead of the attribute names. To better understand how this operation works, let's look at an example. Let the following relation scheme be given: S: Session (Gradebook No., Surname, Subject, Grade). Let's take the selection condition as follows: P = (Subject = ‘Computer Science’ and Assessment > 3). We need to extract from the initial relation-operand those tuples that contain information about students who passed the subject "Computer Science" by at least three points. Let also be given the following tuple from this relation: t0(S) ∈ r(S): {(Gradebook #: 100), (Surname: 'Ivanov'), (Subject: 'Databases'), (Score: 5)}; Applying our selection condition to the tuple t0, we get: P t0 = ('Databases' = 'Informatics' and 5 > 3); On this particular tuple, the selection condition is not met. In general, the result of this particular sample σ<Subject = 'Computer Science' and Grade > 3 > Session there will be a "Session" table, in which rows are left that satisfy the selection condition. 2. Unary projection operation Another standard unary operation that we will study is the projection operation. Projection operation is the operation of selecting columns from a table representing a relation, according to some attribute. Namely, the machine chooses those attributes (that is, literally those columns) of the original operand relation that were specified in the projection. projection operator denoted by [S'] or π . Here S' is a subschema of the original schema of relation S, i.e. some of its columns. What does this mean? This means that S' has fewer attributes than S, because only those attributes remained in S' for which the projection condition was satisfied. And in the table representing the relation r(S' ), there are as many rows as there are in the table r(S), and there are fewer columns, since only those corresponding to the remaining attributes remain. Thus, the projection operator π< S'> applied to the relation r(S) results in a new relation with a different relation scheme r(S' ), consisting of projections t(S) [S' ] of tuples of the original relation. How are these tuple projections defined? Projection of any tuple t(S) of the original relation r(S) to the subcircuit S' is determined by the following formula: t(S) [S'] = {t(a)|a ∈ def(t) ∩ S'}, S' ⊆S. It is important to note that duplicate tuples are excluded from the result, i.e. there will be no duplicate rows in the table representing the new one. With all of the above in mind, a projection operation in terms of database management systems would look like this: π r(S) ≡ π r ≡ r(S) [S'] ≡ r [S' ] = {t(S) [S'] | t ∈ r}; Let's look at an example illustrating how the fetch operation works. Let the relation "Session" and the scheme of this relation be given: S: Session (classbook number, Surname, Subject, Grade); We will be interested in only two attributes from this scheme, namely the student's "Gradebook #" and "Last Name", so the S' subschema will look like this: S': (Record book number, Surname). We need to project the initial relation r(S) onto the subcircuit S'. Next, let us be given a tuple t0(S) from the original relation: t0(S) ∈ r(S): {(Gradebook #: 100), (Surname: 'Ivanov'), (Subject: 'Databases'), (Score: 5)}; Hence, the projection of this tuple onto the given subcircuit S' will look like this: t0(S) S': {(Account book number: 100), (Surname: 'Ivanov')}; If we talk about the projection operation in terms of tables, then the projection Session [gradebook number, Last name] of the original relation is the Session table, from which all columns are deleted, except for two: gradebook number and Last name. In addition, all duplicate lines have also been removed. 3. Unary renaming operation And the last unary operation we'll look at is attribute renaming operation. If we talk about the relationship as a table, then the renaming operation is needed in order to change the names of all or some of the columns. rename operator looks like this: ρ<φ>, here φ - rename function. This function establishes a one-to-one correspondence between schema attribute names S and Ŝ, where respectively S is the schema of the original relation and Ŝ is the schema of the relation with renamed attributes. Thus, the operator ρ<φ> applied to the relation r(S) gives a new relation with the schema Ŝ, consisting of tuples of the original relation with only renamed attributes. Let's write the operation of renaming attributes in terms of database management systems: ρ<φ> r(S) ≡ ρ<φ>r = {ρ<φ> t(S)| t ∈ r}; Here is an example of using this operation: Let's consider the relation Session already familiar to us, with the scheme: S: Session (classbook number, Surname, Subject, Grade); Let's introduce a new relationship schema Ŝ, with different attribute names that we would like to see instead of the existing ones: Ŝ : (No. ZK, Surname, Subject, Score); For example, a database customer wanted to see other names in your out-of-the-box relation. To implement this order, you need to design the following rename function: φ : (number of record book, Surname, Subject, Grade) → (No. ZK, Surname, Subject, Score); In fact, only two attributes need to be renamed, so it's legal to write the following rename function instead of the current one: φ : (number of record book, Grade) → (No. ZK, Score); Further, let the already familiar tuple belonging to the Session relation be also given: t0(S) ∈ r(S): {(Gradebook #: 100), (Surname: 'Ivanov'), (Subject: 'Databases'), (Score: 5)}; Apply the rename operator to this tuple: ρ<φ>t0(S): {(ZK #: 100), (Surname: 'Ivanov'), (Subject: 'Databases'), (Score: 5)}; So, this is one of the tuples of our relation, whose attributes have been renamed. In tabular terms, the ratio ρ < Gradebook number, Grade → "No. ZK, Score > Session - this is a new table obtained from the "Session" relationship table by renaming the specified attributes. 4. Properties of unary operations Unary operations, like any other, have certain properties. Let's consider the most important of them. The first property of the unary selection, projection, and renaming operations is the property that characterizes the ratio of the cardinalities of the relations. (Recall that the cardinality is the number of tuples in one or another relation.) It is clear that here we are considering, respectively, the initial relation and the relation obtained as a result of applying one or another operation. Note that all properties of unary operations follow directly from their definitions, so they can be easily explained and even, if desired, deduced independently. So: 1) power ratio: a) for the selection operation: | σ r |≤ |r|; b) for the projection operation: | r[S'] | ≤ |r|; c) for the renaming operation: | ρ<φ>r | = |r|; In total, we see that for two operators, namely for the selection operator and the projection operator, the power of the original relations - operands is greater than the power of the relations obtained from the original ones by applying the corresponding operations. This is because the selection accompanying these two select and project operations excludes some rows or columns that do not satisfy the selection conditions. In the case when all rows or columns satisfy the conditions, there is no decrease in power (i.e., the number of tuples), so the inequality in the formulas is not strict. In the case of the renaming operation, the power of the relation does not change, due to the fact that when changing names, no tuples are excluded from the relation; 2) idempotent property: a) for the sampling operation: σ σ r = σ ; b) for the projection operation: r [S'] [S'] = r [S']; c) for the renaming operation, in the general case, the property of idempotency is not applicable. This property means that applying the same operator twice in succession to any relation is equivalent to applying it once. For the operation of renaming relation attributes, generally speaking, this property can be applied, but with special reservations and conditions. The property of idempotency is very often used to simplify the form of an expression and bring it to a more economical, actual form. And the last property we will consider is the property of monotonicity. It is interesting to note that under any conditions all three operators are monotonic; 3) monotonicity property: a) for a fetch operation: r1 ⊆ r2 ⇒σ r1 ⇒ σ r2; b) for the projection operation: r1 ⊆ r2 ⇒ r1[S'] ⊆ r2 [S']; c) for the rename operation: r1 ⊆ r2 ⇒ ρ<φ>r1 ⊆ ρ<φ>r2; The concept of monotonicity in relational algebra is similar to the same concept from ordinary, general algebra. Let us clarify: if initially the relations r1 and r2 were related to each other in such a way that r ⊆ r2, then even after applying any of the three selection, projection, or renaming operators, this relation will be preserved. Lecture No. 5. Relational algebra. Binary Operations 1. Operations of union, intersection, difference Any operations have their own applicability rules that must be observed so that expressions and actions do not lose their meaning. The binary set-theoretic operations of union, intersection, and difference can only be applied to two relations necessarily with the same relation schema. The result of such binary operations will be relations consisting of tuples that satisfy the conditions of the operations, but with the same relation scheme as the operands. 1. The result union operations two relations r1(S) and r2(S) there will be a new relation r3(S) consisting of those tuples of relations r1(S) and r2(S) that belong to at least one of the original relations and with the same relationship schema. So the intersection of the two relations is: r3(S) = r1(S) r2(S) = {t(S) | t ∈r1 ∪t ∈r2}; For clarity, here is an example in terms of tables: Let two relations be given: r1(S):

r2(S):

We see that the schemes of the first and second relations are the same, only they have a different number of tuples. The union of these two relations will be the relation r3(S), which will correspond to the following table: r3(S) = r1(S) r2(S):

So, the schema of relation S has not changed, only the number of tuples has increased. 2. Let's move on to the consideration of the next binary operation - intersection operations two relationships. As we know from school geometry, the resulting relation will include only those tuples of the original relations that are present simultaneously in both relations r1(S) and r2(S) (again, note the same relationship pattern). The operation of the intersection of two relations will look like this: r4(S) = r1(S)∩r2(S) = {t(S) | t ∈ r1 & t ∈ r2}; And again, consider the effect of this operation on relations presented in the form of tables: r1(S):

r2(S):

According to the definition of the operation by the intersection of relations r1(S) and r2(S) there will be a new relation r4(S), whose table view would look like this: r4(S) = r1(S)∩r2(S):

Indeed, if we look at the tuples of the first and second initial relations, there is only one common among them: {b, 2}. It became the only tuple of the new relation r4(S). 3. Difference operation two relations is defined in a similar way to the previous operations. Operand relations, as in the previous operations, must have the same relation schemes, then the resulting relation will include all those tuples of the first relation that are not in the second, i.e.: r5(S) = r1(S)\r2(S) = {t(S) | t ∈ r1 & t ∉ r2}; The already well-known relations r1(S) and r2(S), in a tabular view looking like this: r1(S):

r2(S):

We will consider both operands in the operation of the intersection of two relations. Then, following this definition, the resulting relation r5(S) will look like this: r5(S) = r1(S)\r2(S):

The considered binary operations are basic, other operations, more complex, are based on them. 2. Cartesian product and natural join operations The Cartesian product operation and the natural join operation are binary operations of the product type and are based on the union of two relations operation that we discussed earlier. Although the action of the Cartesian product operation may seem familiar to many, we will nevertheless begin with the natural product operation, since it is a more general case than the first operation. So, consider the natural join operation. It should be immediately noted that the operands of this action can be relations with different schemes, in contrast to the three binary operations of union, intersection and renaming. If we consider two relations with different relation schemes r1(S1) and r2(S2), then their natural compound there will be a new relation r3(S3), which will consist only of those tuples of operands that match at the intersection of relationship schemes. Accordingly, the scheme of the new relationship will be larger than any of the schemes of relations of the original ones, since it is their connection, "gluing". By the way, tuples that are identical in two operand relations, according to which this "gluing" occurs, are called connectable. Let's write the definition of the natural join operation in the formula language of database management systems: r3(S3) = r1(S1)xr2(S2) = {t(S1 ∪S2) | t[S1] ∈ r1 &t(S2) ∈ r2}; Let's consider an example that well illustrates the work of a natural connection, its "gluing". Let two relations r1(S1) and r2(S2), in the tabular form of representation, respectively, equal: r1(S1):

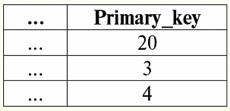

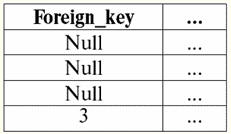

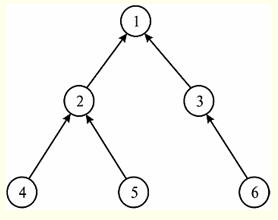

r2(S2):

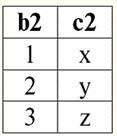

We see that these relations have tuples that coincide at the intersection of schemes S1 and S2 relations. Let's list them: 1) tuple {a, 1} of relation r1(S1) matches the tuple {1, x} of the relation r2(S2); 2) tuple {b, 1} from r1(S1) also matches the tuple {1, x} from r2(S2); 3) the tuple {c, 3} matches the tuple {3, z}. Hence, under natural join, the new relation r3(S3) is obtained by "gluing" exactly on these tuples. So r3(S3) in a table view will look like this: r3(S3) = r1(S1)xr2(S2):

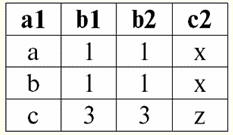

It turns out by definition: scheme S3 does not coincide with the scheme S1, nor with the scheme S2, we "glued" the two original schemas by intersecting tuples to get their natural join. Let us show schematically how tuples are joined when applying the natural join operation. Let the relation r1 has a conditional form:

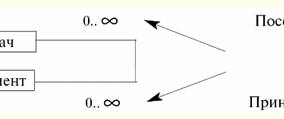

And the ratio r2 - view:

Then their natural connection would look like this:

We see that the "gluing" of relations-operands occurs according to the same scheme that we gave earlier, considering the example. Operation Cartesian connection is a special case of the natural join operation. More specifically, when considering the effect of the operation of the Cartesian product on relations, we deliberately stipulate that in this case we can only talk about non-intersecting relation schemes. As a result of applying both operations, relations with schemas equal to the union of schemas of operand relations are obtained, only all possible pairs of their tuples fall into the Cartesian product of two relations, since the schemas of operands should in no case intersect. Thus, based on the foregoing, we write a mathematical formula for the Cartesian product operation: r4(S4) = r1(S1)xr2(S2) = {t(S1 ∪S2) | t[S1] ∈ r1 &t(S2) ∈ r2}, S1 S2= ∅; Now let's look at an example to show how the resulting relation schema will look when applying the Cartesian product operation. Let two relations r1(S1) and r2(S2), which are presented in tabular form as follows: r1(S1):

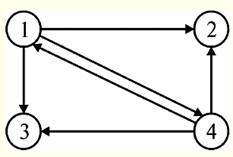

r2(S2):

So we see that none of the tuples of relations r1(S1) and r2(S2), indeed, does not coincide in their intersection. Therefore, in the resulting relation r4(S4) all possible pairs of tuples of the first and second operand relations will fall. Get: r4(S4) = r1(S1)xr2(S2):

We have obtained a new relation scheme r4(S4) not by "gluing" tuples as in the previous case, but by enumeration of all possible different pairs of tuples that do not match in the intersection of the original schemes. Again, as in the case of natural join, we give a schematic example of the operation of the Cartesian product operation. Let r1 set as follows:

And the ratio r2 given:

Then their Cartesian product can be schematically represented as follows:

It is in this way that the resulting relation is obtained when applying the operation of the Cartesian product. 3. Properties of binary operations From the above definitions of the binary operations of union, intersection, difference, Cartesian product, and natural join, properties follow. 1. The first property, as in the case of unary operations, illustrates power ratio relations: 1) for the union operation: |r1 ∪r2| ≤ |r1| + |r2|; 2) for the intersection operation: |r1 ∩r2 | ≤ min(|r1|, |r2|); 3) for the difference operation: |r1 \r2| ≤ |r1|; 4) for the Cartesian product operation: |r1 xr2| = |r1| |r2|; 5) for natural join operation: |r1 xr2| ≤ |r1| |r2|. The ratio of powers, as we remember, characterizes how the number of tuples in relations changes after applying one or another operation. So what do we see? Power association two relations r1 and r2 less than the sum of the cardinalities of the original operand relations. Why is this happening? The thing is that when you merge, matching tuples disappear, overlapping each other. So, referring to the example that we considered after going through this operation, you can see that in the first relation there were two tuples, in the second - three, and in the resulting - four, i.e. less than five (the sum of the cardinalities of the relations-operands ). By the matching tuple {b, 2}, these relations are "glued together". Result Power intersections two relations is less than or equal to the minimum cardinality of the original operand relations. Let us turn to the definition of this operation: only those tuples that are present in both initial relations get into the resulting relation. This means that the cardinality of the new relation cannot exceed the cardinality of the relation-operand whose number of tuples is the smallest of the two. And the power of the result can be equal to this minimum cardinality, since the case is always allowed when all tuples of a relation with a lower cardinality coincide with some tuples of the second relation-operand. In case of operation differences everything is quite trivial. Indeed, if all tuples that are also present in the second relation are "subtracted" from the first relation-operand, then their number (and, consequently, their power) will decrease. In the event that not a single tuple of the first relation matches any tuple of the second relation, i.e., there is nothing to "subtract", its power will not decrease. Interestingly, if the operation Cartesian product the power of the resulting relation is exactly equal to the product of the powers of the two operand relations. It is clear that this happens because all possible pairs of tuples of the original relations are written into the result, and nothing is excluded. And finally, the operation natural connection a relation is obtained whose cardinality is greater than or equal to the product of the cardinalities of the two original relations. Again, this happens because the operand relations are "glued" by matching tuples, and non-matching ones are excluded from the result altogether. 2. Idempotency property: 1) for the union operation: r ∪ r = r; 2) for the intersection operation: r ∩ r = r; 3) for the difference operation: r \ r ≠ r; 4) for the Cartesian product operation (in the general case, the property is not applicable); 5) for the natural join operation: r x r = r. Interestingly, the property of idempotency is not true for all of the above operations, and for the operation of the Cartesian product, it is not applicable at all. Indeed, if you combine, intersect, or naturally connect any relation with itself, it will not change. But if you subtract from a relation exactly equal to it, the result will be an empty relation. 3. Commutative property: 1) for the union operation: r1 ∪r2 =r2 ∪r1; 2) for the intersection operation: r ∩ r = r ∩ r; 3) for the difference operation: r1 \r2 ≠r2 \r1; 4) for the Cartesian product operation: r1 xr2 =r2 xr1; 5) for natural join operation: r1 xr2 =r2 xr1. The commutativity property holds for all operations except for the difference operation. This is easy to understand, because their composition (tuples) does not change from rearranging relations in places. And when applying the difference operation, it is important which of the operand relations comes first, because it depends on which tuples of which relation will be taken as reference, i.e., with which tuples other tuples will be compared for exclusion. 4. Associativity property: 1) for the union operation: (r1 ∪r2)∪r3 =r1 ∪(r2 ∪r3); 2) for the intersection operation: (r1 ∩r2)∩r3 =r1 ∩(r2 ∩r3); 3) for the difference operation: (r1 \r2)\r3 ≠r1 \(r2 \r3); 4) for the Cartesian product operation: (r1 xr2)xr3 =r1 x(r2 xr3); 5) for natural join operation: (r1 xr2)xr3 =r1 x(r2 xr3). And again we see that the property is executed for all operations except for the difference operation. This is explained in the same way as in the case of applying the commutativity property. By and large, the operations of union, intersection, difference, and natural join do not care what order the operand relations are in. But when relationships are "taken away" from each other, order plays a dominant role. Based on the above properties and reasoning, the following conclusion can be drawn: the last three properties, namely the property of idempotency, commutativity and associativity, are true for all the operations we have considered, except for the operation of the difference of two relations, for which none of the three properties indicated were satisfied at all, and only in one case the property was found to be inapplicable. 4. Connection operation options Using as a basis the unary operations of selection, projection, renaming and binary operations of union, intersection, difference, Cartesian product and natural join considered earlier (all of them are generally called connection operations), we can introduce new operations derived using the above concepts and definitions. This activity is called compiling. join operation options. The first such variant of join operations is the operation inner connection according to the specified connection condition. The operation of an inner join, by some specific condition, is defined as a derivative operation from the operations of the Cartesian product and selection. We write the formula definition of this operation: r1(S1)x P r2(S2) = σ (r1 xr2), St1 S2 = ∅; Here P = P<S1 ∪S2> - a condition imposed on the union of two schemes of the original relations-operands. It is by this condition that tuples are selected from the relations r1 and r2 into the resulting relation. Note that the inner join operation can be applied to relationships with different relationship schemas. These schemes can be any, but in no case should they intersect. The tuples of the original relation-operands that are the result of the inner join operation are called joinable tuples. To visually illustrate the operation of the inner join operation, we will give the following example. Let us be given two relations r1(S1) and r2(S2) with different relationship schemes: r1(S1):

r2(S2):

The following table will give the result of applying the inner join operation on the condition P = (b1 = b2). r1(S1)x P r2(S2):

So, we see that the "gluing" of the two tables representing the relationship really happened precisely for those tuples in which the condition of the inner join operation P = (b1 = b2) is fulfilled. Now, based on the inner join operation already introduced, we can introduce the operation left outer join и right outer join. Let's explain. The result of the operation left outer join is the result of the inner join, completed with non-joinable tuples of the left source relation-operand. Similarly, the result of a right outer join operation is defined as the result of an inner join operation augmented with non-joinable tuples of the right-handed source relation-operand. The question of how the resulting relations of the operations of the left and right outer joins are replenished is quite expected. Tuples of one relation-operand are complemented on the schema of another relation-operand Null values. It is worth noting that the left and right outer join operations introduced in this way are derived operations from the inner join operation. To write down the general formulas for the left and right outer join operations, we will carry out some additional constructions. Let us be given two relations r1(S1) and r2(S2) with different schemes of relations S1 and S2, which do not intersect each other. Since we have already stipulated that the left and right inner join operations are derivatives, we can obtain the following auxiliary formulas for determining the left outer join operation: 1) r3 (S2 ∪S1) ≔ r1(S1)x Pr2(S2); r 3 (S2 ∪S1) is simply the result of the inner join of the relations r1(S1) and r2(S2). The left outer join is a derivative operation of the inner join, which is why we start our constructions with it; 2) r4(S1) ≔ r 3(S2 ∪S1) [S1]; Thus, with the help of a unary projection operation, we have selected all joinable tuples of the left initial relation-operand r1(S1). The result is designated r4(S1) for ease of use; 3) r5 (S1) ≔ r1(S1)\r4(S1); Here r1(S1) are all tuples of the left source relation-operand, and r4(S1) - its own tuples, only connected. Thus, using the binary operation of the difference, with respect to r5(S1) we got all non-joinable tuples of the left operand relation; 4) r6(S2)≔{∅(S2)}; {∅(S2)} is a new relation with the schema (S2) containing only one tuple, and made up of Null values. For convenience, we denoted this ratio as r6(S2); 5) r7 (S2 ∪S1) ≔ r5(S1)xr6(S2); Here we have taken the unconnected tuples of the left operand relation (r5(S1)) and supplemented them on the scheme of the second relation-operand S2 Null-values, i.e. Cartesian multiplied the relation consisting of these same non-joinable tuples by the relation r6(S2) defined in paragraph four; 6) r1(S1) →x P r2(S2) ≔ (r1 x P r2)∪r7 (S2 ∪S1); This is left outer join, obtained, as can be seen, by the union of the Cartesian product of the original relations-operands r1 and r2 and relations r7 (S2 ∪ S1) defined in paragraph XNUMX. Now we have all the necessary calculations to determine not only the operation of the left outer join, but by analogy and to determine the operation of the right outer join. So: 1) operation left outer join in strict form it looks like this: r1(S1) →x P r2(S2) ≔ (r1 x P r2) ∪ [(r1 \(r1 x P r2) [S1]) x {∅(S2)}]; 2) operation right outer join is defined in a similar way to the left outer join operation and has the following form: r1(S1) →x P r2(S2) ≔ (r1 x P r2) ∪ [(r2 \(r1 x P r2) [S2]) x {∅(S1)}]; These two derived operations have only two properties worth mentioning. 1. Property of commutativity: 1) for the left outer join operation: r1(S1) →x P r2(S2) ≠ r2(S2) →x P r1(S1); 2) for the right outer join operation: r1(S1) ←x P r2(S2) ≠ r2(S2) ←x P r1(S1) So, we see that the commutativity property is not satisfied for these operations in a general form, but at the same time, the operations of the left and right outer joins are mutually inverse to each other, i.e., the following is true: 1) for the left outer join operation: r1(S1) →x P r2(S2) = r2(S2) →x P r1(S1); 2) for the right outer join operation: r1(S1) ←x P r2(S2) = r2(S2) ←x Pr1(S1). 2. The main property of left and right outer join operations is that they allow reestablish the initial relation-operand according to the final result of a particular join operation, i.e., the following are performed: 1) for the left outer join operation: r1(S1) = (r1 →x P r2) [S1]; 2) for the right outer join operation: r2(S2) = (r1 ←x P r2) [S2]. Thus, we see that the first original relation-operand can be restored from the result of the left-right join operation, and more specifically, by applying to the result of this join (r1 xr2) the unary operation of projection onto the scheme S1,[S1]. And similarly, the second original relation-operand can be restored by applying the right outer join (r1 xr2) the unary operation of projection onto the scheme of relation S2. Let's give an example for a more detailed consideration of the operation of the operations of the left and right outer joins. Let us introduce the already familiar relations r1(S1) and r2(S2) with different relationship schemes: r1(S1):

r2(S2):

Nonjoinable tuple of left relation-operand r2(S2) is a tuple {d, 4}. Following the definition, it is they who should supplement the result of the internal connection of the two initial relations-operands. Inner join condition of relations r1(S1) and r2(S2) we also leave the same: P = (b1 = b2). Then the result of the operation left outer join there will be the following table: r1(S1) →x P r2(S2):

Indeed, as we can see, as a result of the impact of the operation of the left outer join, the result of the inner join operation was replenished with non-joinable tuples of the left, i.e., in our case, the first relation-operand. The replenishment of the tuple on the scheme of the second (right) source relation-operand, by definition, happened with the help of Null-values. And similar to the result right outer join by the same as before, the condition P = (b1 = b2) of the original relations-operands r1(S1) and r2(S2) is the following table: r1(S1) ←x P r2(S2):

Indeed, in this case, the result of the inner join operation should be replenished with non-joinable tuples of the right, in our case, the second initial relation-operand. Such a tuple, as it is not difficult to see, in the second relation r2(S2) one, namely {2, y}. Next, we act on the definition of the operation of the right outer join, supplement the tuple of the first (left) operand in the scheme of the first operand with Null-values. Finally, let's look at the third version of the join operations above. Full outer join operation. This operation can be considered not only as an operation derived from inner join operations, but also as a union of left and right outer join operations. Full outer join operation is defined as the result of completing the same inner join (as in the case of the definition of left and right outer joins) with non-joinable tuples of both the left and right initial operand relations. Based on this definition, we give the formulary form of this definition: r1(S1) ↔x P r2(S2) = (r1 →x P r2)∪(r1 ←x P r2); The full outer join operation also has a property similar to that of the left and right outer join operations. Only due to the original reciprocal nature of the full outer join operation (after all, it was defined as the union of left and right outer join operations), it performs commutativity property: r1(S1) ↔x P r2(S2)=r2(S2) ↔ x P r1(S1); And to complete the consideration of options for join operations, let's look at an example illustrating the operation of a full outer join operation. We introduce two relations r1(S1) and r2(S2) and the join condition. Let r1(S1)

r2(S2):

And let the condition of connection of relations r1(S1) and r2(S2) will be: P = (b1 = b2), as in the previous examples. Then the result of the full outer join operation of relations r1(S1) and r2(S2) by the condition P = (b1 = b2) there will be the following table: r1(S1) ↔x P r2(S2):

So, we see that the full outer join operation clearly justifies its definition as the union of the results of left and right outer join operations. The resulting relation of the inner join operation is complemented by simultaneously non-joinable tuples as the left (first, r1(S1)), and right (second, r2(S2)) of the original relation-operand. 5. Derivative operations So, we have considered various variants of join operations, namely the operations of inner join, left, right and full outer join, which are derivatives of the eight original operations of relational algebra: unary operations of selection, projection, renaming and binary operations of union, intersection, difference, Cartesian product and natural connection. But even among these original operations there are examples of derivative operations. 1. For example, operation intersections two ratios is a derivative of the operation of the difference of the same two ratios. Let's show it. The intersection operation can be expressed by the following formula: r1(S)∩r2(S) = r1 \r1 \r2 or, which gives the same result: r1(S)∩r2(S) = r2 \r2 \r1; 2. Another example of a base operation derived from eight original operations is the operation natural connection. In its most general form, this operation is derived from the binary operation of the Cartesian product and the unary operations of selecting, projecting, and renaming attributes. However, in turn, the inner join operation is a derivative operation of the same operation of the Cartesian product of relations. Therefore, to show that the natural join operation is a derivative operation, consider the following example. Let's compare the previous examples for natural and inner join operations. Let us be given two relations r1(S1) and r2(S2) that will act as operands. They are equal: r1(S1):

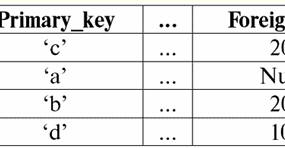

r2(S2):

As we have already received earlier, the result of the natural join operation of these relations will be a table of the following form: r3(S3) ≔ r1(S1)xr2(S2):

And the result of the inner join of the same relations r1(S1) and r2(S2) by the condition P = (b1 = b2) there will be the following table: r4(S4) ≔ r1(S1)x P r2(S2):

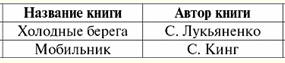

Let us compare these two results, the resulting new relations r3(S3) and r4(S4). It is clear that the natural join operation is expressed through the inner join operation, but, most importantly, with a join condition of a special form. Let's write a mathematical formula that describes the action of the natural join operation as a derivative of the inner join operation. r1(S1)xr2(S2) = { ρ<ϕ1>r1 x E ρ< ϕ2>r2}[S1 ∪S2], where E - connectivity condition tuples; E= ∀a ∈S1 S2 [IsNull(b1) & IsNull(2) ∪b1 = b2]; b1 = ϕ1 (name(a)), b2 = ϕ2 (name(a)); Here is one of renaming functions ϕ1 is identical, and another renaming function (namely, ϕ2) renames the attributes where our schemas intersect. The connectivity condition E for tuples is written in a general form, taking into account the possible occurrence of Null-values, because the inner join operation (as mentioned above) is a derivative operation from the operation of the Cartesian product of two relations and the unary selection operation. 6. Expressions of relational algebra Let us show how the previously considered expressions and operations of relational algebra can be used in the practical operation of various databases. Let, for example, we have at our disposal a fragment of some commercial database: Suppliers (Supplier code, Vendor name, Vendor city); Tools (Tool code, Tool name,...); Deliveries (Supplier code, part code); The underlined attribute names[1] are key (i.e., identifying) attributes, each in its own relation. Suppose that we, as the developers of this database and the custodians of information on this subject, are ordered to obtain the names of suppliers (Supplier Name) and their location (Supplier City) in the case when these suppliers do not supply any tools with a generic name "Pliers". In order to determine all the suppliers that meet this requirement in our possibly very large database, we write a few expressions of relational algebra. 1. We form a natural connection of the "Suppliers" and "Supplies" relationships in order to match with each supplier the codes of the parts supplied by him. The new relation - the result of applying the operation of natural join - for the convenience of further application, we denote by r1. Suppliers x Supplies ≔ r1 (Supplier Code, Supplier Name, Supplier City, In parentheses, we have listed all the attributes of the relations involved in this natural join operation. We can see that the "Vendor ID" attribute is duplicated, but in the transaction summary record, each attribute name should appear only once, i.e.: Suppliers x Supplies ≔ r1 (Supplier code, Supplier name, Supplier city, Instrument code); 2. again we form a natural connection, only this time the relationship obtained in paragraph one and the relationship Instruments. We do this in order to match the name of this tool with each tool code obtained in the previous paragraph. r1 x Tools [Tool Code, Tool Name] ≔ r2 (Supplier Code, Supplier Name, Supplier City, The resulting result will be denoted by r2, duplicate attributes are excluded: r1 x Tools [Tool Code, Tool Name] ≔ r2 (Supplier code, Supplier name, Supplier city, Instrument code, Instrument name); Note that we take only two attributes from the Tools relation: "Tool Code" and "Tool Name". To do this, we, as can be seen from the notation of the relation r2, applied the unary projection operation: Tools [Tool code, Tool name], i.e., if the relation Tools were presented as a table, the result of this projection operation would be the first two columns with the headings "Tool code" and "Tool name" respectively ". It is interesting to note that the first two steps that we have already considered are quite general, that is, they can be used to implement any other requests. But the next two points, in turn, represent concrete steps to achieve the specific task set before us. 3. Write a unary selection operation according to the condition <"Tool name" = "Pliers"> in relation to the ratio r2obtained in the previous paragraph. And we, in turn, apply the unary projection operation [Supplier Code, Supplier Name, Supplier City] to the result of this operation in order to get all the values of these attributes, because we need to get this information based on the order. So: (σ<Tool name = "Pliers"> r2) [Supplier Code, Supplier Name, Supplier City] ≔ r3 (Supplier code, Supplier name, Supplier city, Tool code, Tool name). In the resulting ratio, denoted by r3, only those suppliers (with all their identification data) turned out to supply tools with the generic name "Pliers". But by virtue of the order, we need to single out those suppliers who, on the contrary, do not supply such tools. Therefore, let's move on to the next step of our algorithm and write down the last relational algebra expression, which will give us the information we are looking for. 4. First, we make the difference between the "Suppliers" relationship and the relationship r3, and after applying this binary operation, we apply the unary projection operation on the "Supplier Name" and "Supplier City" attributes. (Suppliers\r3) [Supplier Name, Supplier City] ≔ r4 (Supplier code, Supplier name, Supplier city); The result is designated r4, this relation included just those tuples of the original "Suppliers" relation that correspond to the condition of our order. So, we have shown how, using expressions and operations of relational algebra, you can perform all kinds of actions with arbitrary databases, perform various orders, etc. Lecture No. 6. SQL language Let us first give a little historical background. The SQL language, designed to interact with databases, appeared in the mid-1970s. (first publications date back to 1974) and was developed by IBM as part of an experimental relational database management system project. The original name of the language is SEQUEL (Structured English Query Language) - only partially reflected the essence of this language. Initially, immediately after its invention and during the primary period of operation of the SQL language, its name was an abbreviation for the phrase Structured Query Language, which translates as "Structured Query Language". Of course, the language was focused mainly on the formulation of queries to relational databases that is convenient and understandable to users. But, in fact, almost from the very beginning, it was a complete database language, providing, in addition to the means of formulating queries and manipulating databases, the following features: 1) means of defining and manipulating the database schema; 2) means for defining integrity constraints and triggers (which will be mentioned later); 3) means of defining database views; 4) means of defining physical layer structures that support the efficient execution of requests; 5) means of authorizing access to relations and their fields. The language lacked the means of explicitly synchronizing access to database objects from the side of parallel transactions: from the very beginning it was assumed that the necessary synchronization was implicitly performed by the database management system. Currently, SQL is no longer an abbreviation, but the name of an independent language. Also, at present, the structured query language is implemented in all commercial relational database management systems and in almost all DBMS that were not originally based on a relational approach. All manufacturing companies claim that their implementation conforms to the SQL standard, and in fact the implemented dialects of the Structured Query Language are very close. This was not achieved immediately. A feature of most modern commercial database management systems that makes it difficult to compare existing dialects of SQL is the lack of a uniform description of the language. Typically, the description is scattered throughout various manuals and mixed with a description of system-specific language features that are not directly related to the structured query language. Nevertheless, it can be said that the basic set of SQL statements, which includes statements for determining the database schema, fetching and manipulating data, authorizing data access, support for embedding SQL in programming languages, and dynamic SQL statements, is well-established in commercial implementations and more or less conforms to the standard. . Over time and work on the Structured Query Language, it has been possible to achieve a standard for a clear standardization of the syntax and semantics of data retrieval statements, data manipulation, and fixing database integrity constraints. Means have been specified for defining the primary and foreign keys of relationships and so-called integrity check constraints, which are a subset of immediately checked SQL integrity constraints. The tools for defining foreign keys make it easy to formulate the requirements of the so-called referential integrity of databases (which we will talk about later). This requirement, common in relational databases, could also be formulated on the basis of the general mechanism of SQL integrity constraints, but the formulation based on the concept of a foreign key is simpler and more understandable. So, taking into account all this, at present, the structured query language is not just the name of one language, but the name of a whole class of languages, since, despite the existing standards, various dialects of the structured query language are implemented in various database management systems, which, of course, have one common basis. 1. The Select statement is the basic statement of the Structured Query Language The central place in the SQL structured query language is occupied by the Select statement, which implements the most demanded operation when working with databases - queries. The Select operator evaluates both relational and pseudo-relational algebra expressions. In this course, we will consider the implementation of only the unary and binary operations of relational algebra that we have already covered, as well as the implementation of queries using the so-called subqueries. By the way, it should be noted that in the case of working with relational algebra operations, duplicate tuples may appear in the resulting relations. There is no strict prohibition against the presence of duplicate rows in relations in the rules of the structured query language (unlike in ordinary relational algebra), so it is not necessary to exclude duplicates from the result. So let's look at the basic structure of the Select statement. It is quite simple and includes the following standard mandatory phrases: Select ... From... Where... ; In place of the ellipsis in each line should be relations, attributes and conditions of a particular database and tasks for it. In the most general case, the basic Select structure should look like this: Select select some attributes from from such a relationship Where with such and such conditions for sampling tuples Thus, we select attributes from the relationship scheme (headings of some columns), while indicating from which relationships (and, as you can see, there may be several) we make our selection and, finally, on the basis of what conditions we stop our choice on certain tuples. It is important to note that attribute references are made using their names. Thus, the following is obtained work algorithm this basic Select statement: 1) the conditions for selecting tuples from the relation are remembered; 2) it is checked which tuples satisfy the specified properties. Such tuples are remembered; 3) the attributes listed in the first line of the basic structure of the Select statement with their values are output. (If we talk about the tabular form of the relationship, then those columns of the table will be displayed, the headings of which were listed as necessary attributes; of course, the columns will not be displayed completely, in each of them only those tuples that satisfied the named conditions will remain.) Consider an example. Let us be given the following relation r1, as a fragment of some bookstore database: